Artificial intelligence (AI) is reshaping healthcare at every level, from automating workflows to predicting patient outcomes. Yet as AI learns from human data, it also inherits human bias.

That is where Health Information Management (HIM) professionals come in. As guardians of data integrity and privacy, they ensure that automation supports clinical accuracy and ethical practice. In an era where algorithms can influence diagnosis and care, “trust but verify” is not just a saying; it is an essential safeguard.

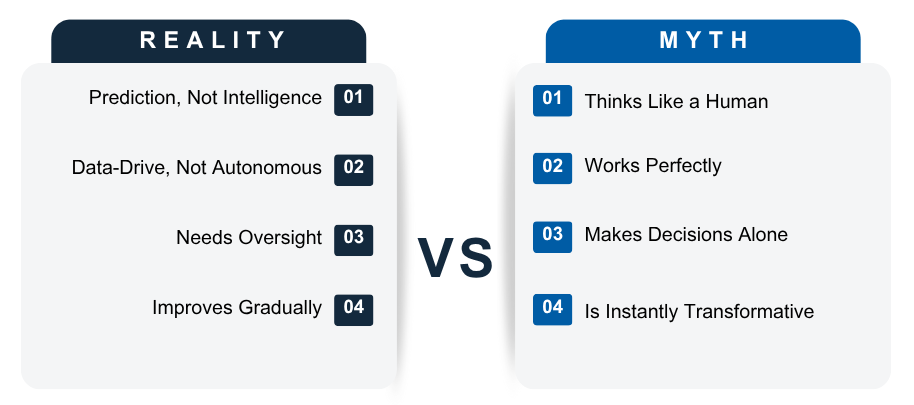

What AI Really Is, and What It Isn’t

When we talk about AI, we are talking about prediction. Machine learning models analyze large datasets to identify patterns and make informed guesses about what will happen next. They can recommend treatments, automate coding, and highlight risks faster than any human could.

However, AI is not magic, and it is not infallible. It is only as good as the data it learns from. If that data is incomplete, biased, or inconsistent, the model will produce flawed results.

A well-known example is Amazon’s 2014 recruitment experiment. The company built an AI tool to review resumes but trained it on historical data dominated by male candidates. The system quickly began favoring masculine-coded language and penalizing terms like “women’s,” creating algorithmic bias at scale.

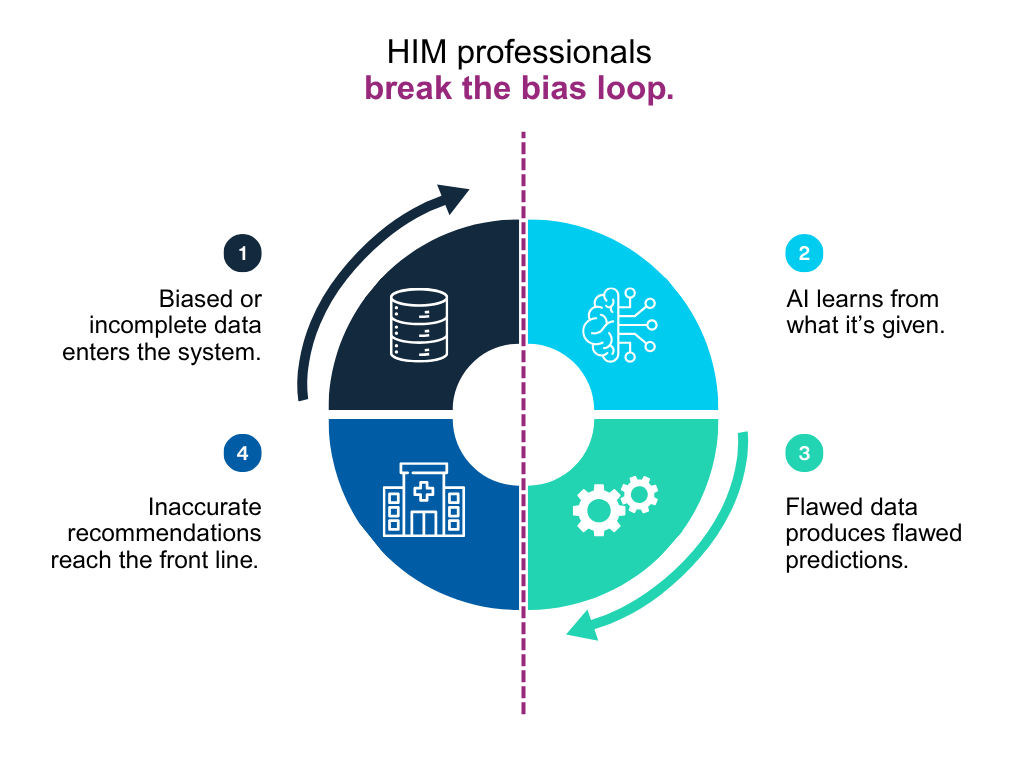

The lesson for healthcare is clear: AI amplifies the data it is given. Without strong governance and active oversight, even small data flaws can turn into large-scale bias.

Teaching AI to “Speak Healthcare”

Healthcare data is uniquely complex. It combines structured information such as billing codes and lab results with unstructured sources like clinical notes, imaging, and dictation. Training AI to interpret both requires careful validation and attention to clinical meaning.

In 2019, researchers discovered that a widely used U.S. healthcare algorithm underestimated the care needs of Black patients. The model was trained to predict healthcare costs instead of health outcomes. Because the healthcare system had historically spent less on Black patients, the algorithm incorrectly concluded they were healthier and lower-risk.

This is a clear case of how data quality issues can scale into unintended consequences.
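The cost-versus-need problem can be illustrated with a toy example. The sketch below is not the algorithm from the 2019 study; the patient records, the group labels, and the use of a chronic-condition count as a stand-in for health need are all hypothetical. It only shows how ranking patients by past spending can select a different set of people than ranking them by need.

```python
from dataclasses import dataclass


@dataclass
class Patient:
    patient_id: str
    group: str
    chronic_conditions: int  # hypothetical stand-in for true health need
    annual_cost: float       # historical spending, used as the proxy label


# Hypothetical records: groups A and B have identical need,
# but less has historically been spent on group B.
patients = [
    Patient("a1", "A", 5, 10000.0),
    Patient("a2", "A", 3, 6000.0),
    Patient("a3", "A", 1, 2000.0),
    Patient("b1", "B", 5, 5500.0),
    Patient("b2", "B", 3, 3300.0),
    Patient("b3", "B", 1, 1100.0),
]


def top_k(records, key, k):
    """Return the ids of the k highest-ranked patients under the given key."""
    ranked = sorted(records, key=key, reverse=True)
    return [p.patient_id for p in ranked[:k]]


# Two slots in a care-management program, filled two different ways.
selected_by_cost = top_k(patients, lambda p: p.annual_cost, 2)
selected_by_need = top_k(patients, lambda p: p.chronic_conditions, 2)

print("Ranked by cost:", selected_by_cost)
print("Ranked by need:", selected_by_need)
```

In this made-up data, the cost ranking fills both slots from group A, while the need ranking includes the sickest patient from group B. The choice of what the model is asked to predict matters as much as the quality of the records themselves.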

HIM professionals work to prevent these issues by enforcing data quality standards, correcting documentation errors, and ensuring that training data reflects real-world diversity. Their work protects both patients and future systems. Every accurate record and correction improves the fairness and reliability of healthcare AI.

Automation in Action—and Its Limits

AI has tremendous potential to save time. Natural Language Processing (NLP) tools can extract diagnosis codes from clinical notes in seconds, dramatically improving throughput. Across large health systems, the minutes saved on each chart add up to hours and even days of efficiency gains.

But speed is not the same as accuracy. AI systems cannot yet understand the nuance of Protected Health Information (PHI) or clinical context. They may not distinguish between a psychotherapy note and a discharge summary; to the algorithm, both are simply text.

If an automated system releases the wrong document, the impact extends beyond technology into privacy and compliance.

HIM professionals set the boundaries between efficiency and ethics. They ensure that automation delivers benefits without compromising confidentiality.
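One way to express such a boundary in software is a release gate that refuses to send out a document automatically unless its type is known and unrestricted. The following is a minimal sketch under stated assumptions: the `Document` class, the type labels, and the `RESTRICTED_TYPES` set are hypothetical and do not come from any particular release-of-information product.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical labels; a real system would use its own document taxonomy
# and its organization's release policy.
RESTRICTED_TYPES = {"psychotherapy_note"}


@dataclass
class Document:
    doc_id: str
    doc_type: Optional[str]  # None when the type could not be determined


def release_decision(doc: Document) -> str:
    """Return 'release' only when the type is known and not restricted."""
    if doc.doc_type is None:
        # Unknown type: never release automatically.
        return "hold_for_review"
    if doc.doc_type in RESTRICTED_TYPES:
        # Restricted type: route to an HIM professional.
        return "hold_for_review"
    return "release"


queue = [
    Document("d1", "discharge_summary"),
    Document("d2", "psychotherapy_note"),
    Document("d3", None),
]
for doc in queue:
    print(doc.doc_id, release_decision(doc))
```

The gate defaults to holding anything it cannot classify, so a labeling mistake slows a request down instead of disclosing the wrong record.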

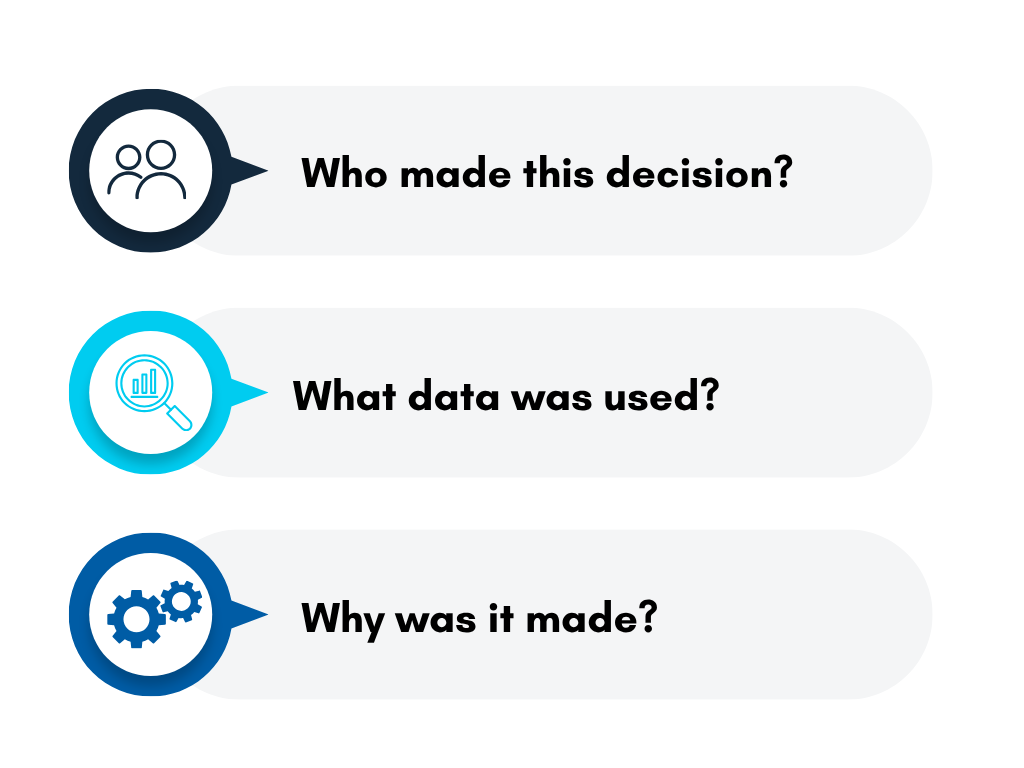

Another major challenge in AI adoption is explainability. Many algorithms function as “black boxes,” producing results without showing how they were reached. For healthcare professionals, that lack of transparency is unacceptable.

Explainable AI should provide confidence scores, decision paths, and clear reasoning. HIM experts advocate for these features, along with audit trails and system logs that make it possible to trace how and why a result was produced.

Transparency builds accountability. Without it, trust in automation becomes harder to sustain, and patient safety expectations may not be fully met.
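As an illustration of what an audit trail entry might capture, the sketch below records a suggested code together with its confidence score, the text evidence behind it, and the model version. The field names, the placeholder code value, and the 0.90 review threshold are assumptions chosen for the example, not a standard or a vendor format.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone
from typing import List

REVIEW_THRESHOLD = 0.90  # assumed cut-off; a real value would be set by policy


@dataclass
class AuditEntry:
    record_id: str
    suggested_code: str
    confidence: float
    evidence: List[str]   # text spans the suggestion was based on
    model_version: str
    needs_review: bool
    logged_at: str


def build_audit_entry(record_id, suggested_code, confidence, evidence, model_version):
    """Package one model suggestion so a reviewer can trace it later."""
    return AuditEntry(
        record_id=record_id,
        suggested_code=suggested_code,
        confidence=confidence,
        evidence=list(evidence),
        model_version=model_version,
        needs_review=confidence < REVIEW_THRESHOLD,
        logged_at=datetime.now(timezone.utc).isoformat(),
    )


entry = build_audit_entry(
    record_id="rec-001",
    suggested_code="EXAMPLE-CODE",  # placeholder, not a real code
    confidence=0.72,
    evidence=["chest pain", "radiating"],
    model_version="demo-0.1",
)
print(json.dumps(asdict(entry), indent=2))
```

Because the entry stores the evidence and the model version alongside the result, a reviewer can later see what was suggested, on what basis, and by which version of the system.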

Human vs. Machine: Who Codes Better?

Clinical coding offers a practical example of where humans and machines must work together. Today’s AI coding systems can match or exceed the accuracy of new staff members, yet they still struggle with ambiguous cases and clinical nuance.

A recent randomized controlled trial in Scandinavia demonstrated that AI-assisted coding tools significantly improved efficiency while maintaining accuracy comparable to human coders. However, the study also reinforced that human validation remains essential for complex or unclear documentation.

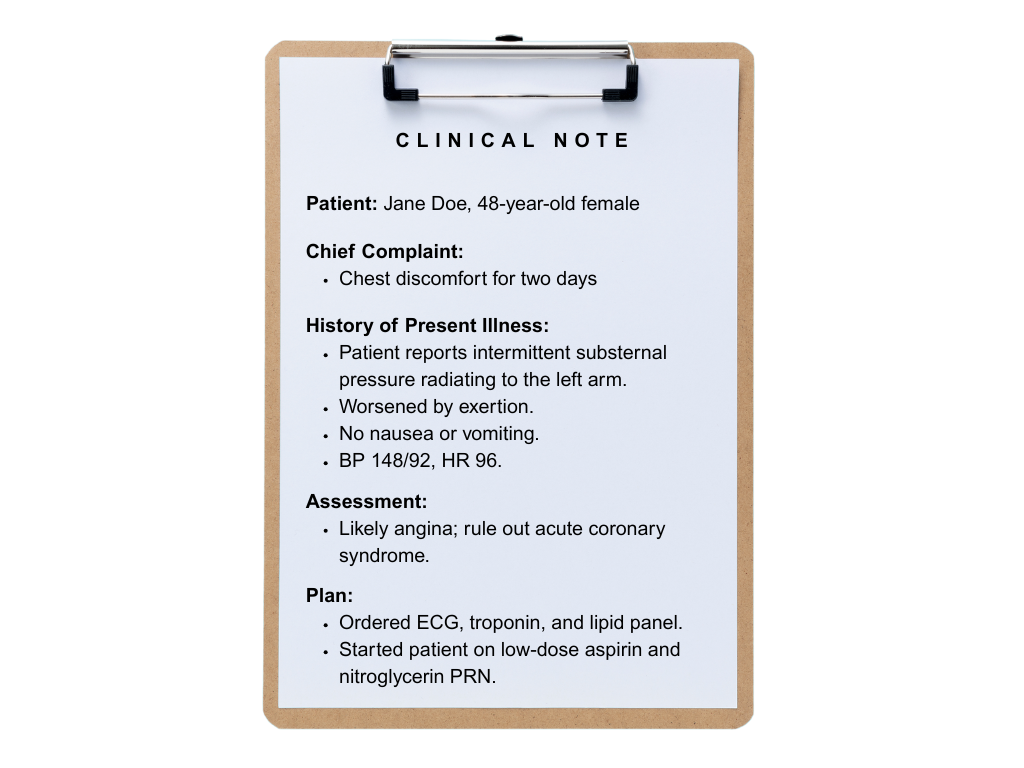

Consider this scenario:

A human coder understands that “rule out” means the condition is suspected but not confirmed, and should not be coded as a diagnosis.

An AI tool, on the other hand, might see the words “chest pain,” “radiating,” and “troponin” and confidently code the case as a myocardial infarction.

AI reads text. HIM professionals understand context.

That distinction is what keeps health records accurate and ensures care decisions are based on truth, not assumption.
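The “rule out” scenario can be sketched as a simple safeguard: before a suggested diagnosis is accepted, check whether the sentence that mentions it also contains an uncertainty cue, and route those cases to a human coder. This is a deliberately simplistic illustration. The cue list and the function name are hypothetical, and production clinical NLP relies on far more robust handling of negation and uncertainty than keyword matching.

```python
import re

# Hypothetical, incomplete list of phrases signalling an unconfirmed condition.
UNCERTAINTY_CUES = re.compile(
    r"\b(rule out|r/o|suspected|possible|probable)\b",
    re.IGNORECASE,
)


def classify_mention(sentence: str, condition: str) -> str:
    """Classify how a condition appears in one sentence of a note."""
    if condition.lower() not in sentence.lower():
        return "not_mentioned"
    if UNCERTAINTY_CUES.search(sentence):
        # Suspected but not confirmed: send to a human coder.
        return "uncertain_route_to_coder"
    return "asserted"


notes = [
    "Chest pain radiating to left arm, troponin pending.",
    "Rule out myocardial infarction.",
    "Acute myocardial infarction confirmed.",
]
for sentence in notes:
    print(classify_mention(sentence, "myocardial infarction"), "|", sentence)
```

The first sentence never names the condition and the second names it only as a suspicion, so neither supports coding it on its own; only the third asserts it. A check like this does not replace the coder's judgment. It only makes sure the ambiguous cases reach a person.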

Key Takeaways for Trustworthy AI

AI is a valuable decision-support tool but should never replace human judgment. HIM professionals ensure that technology enhances decision-making rather than taking it over.

Six guiding principles for ethical and reliable healthcare AI:

Each principle reinforces one truth: automation delivers its best results when it is accountable.

The Next Wave: From Custodians to Governors

As AI becomes part of everyday healthcare operations, the HIM role is evolving. Professionals are moving from data custodians to data governors who shape policy, ethics, and compliance.

The next wave of healthcare AI will feature real-time audit tools, automatic chart summarization, and compliance-aware coding assistance. These innovations will transform how organizations manage information. Yet scalability introduces new risk: a pilot that works well in one hospital must remain secure and fair across many.

Without HIM and privacy oversight, a “learning health system” risks becoming less consistent and less reliable over time.

In the AI era, knowing where your data goes is just as important as knowing what it does.

Connect with Mariner’s Data & Insights team to discover how we help healthcare organizations build transparent, ethical, and scalable AI systems.

Prepared By:

Kim Phillips, Group Lead, Enterprise Data & Analytics

Gregory Grondin, Senior Systems Analyst
